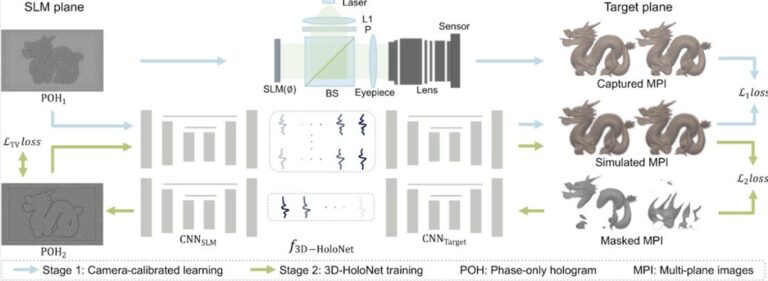

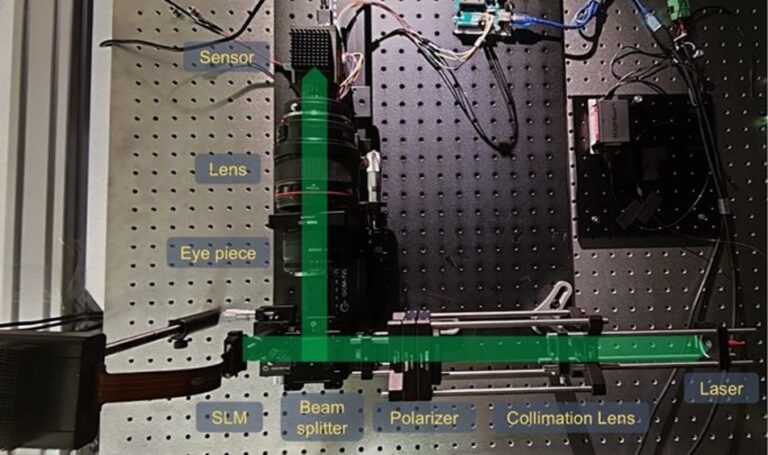

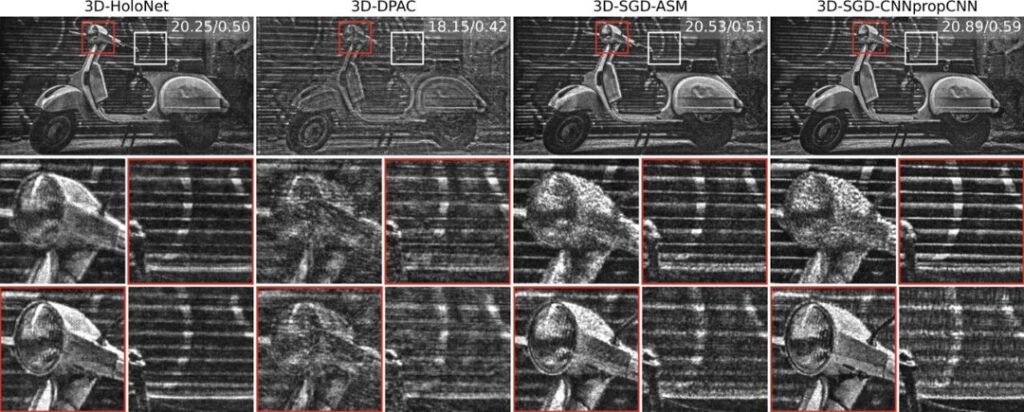

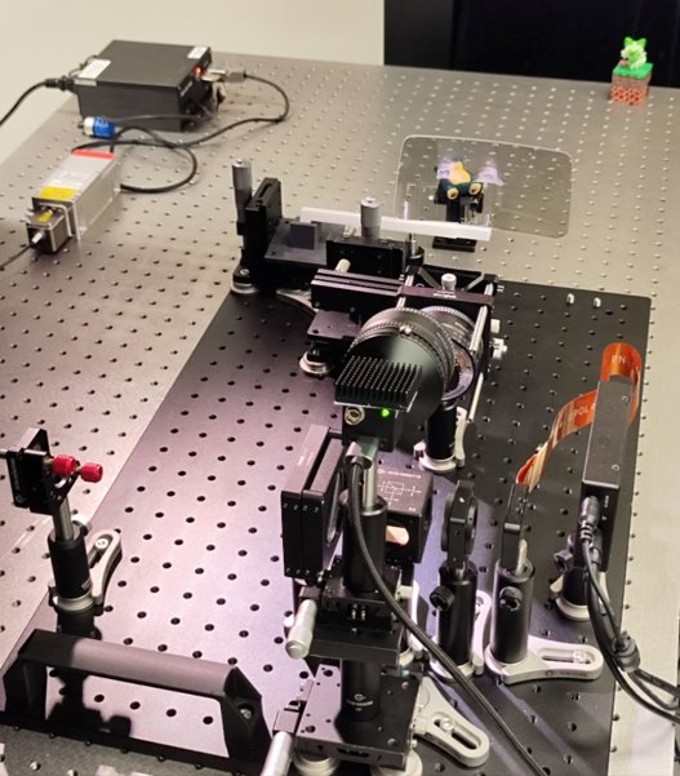

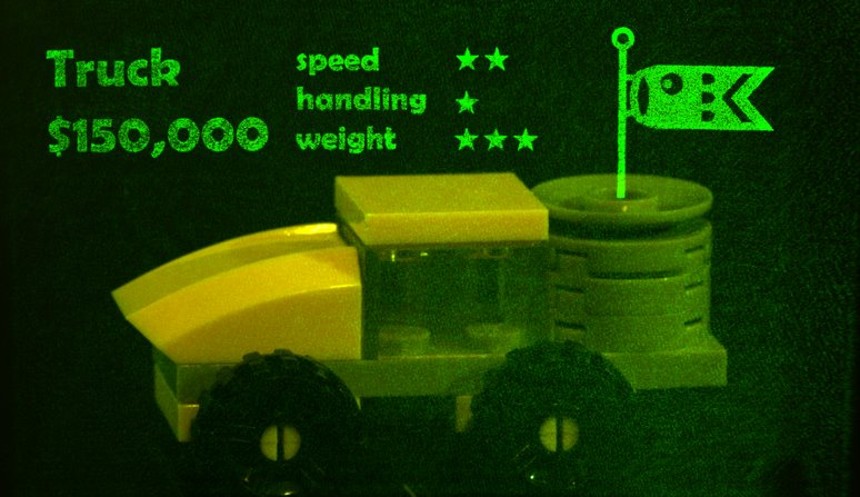

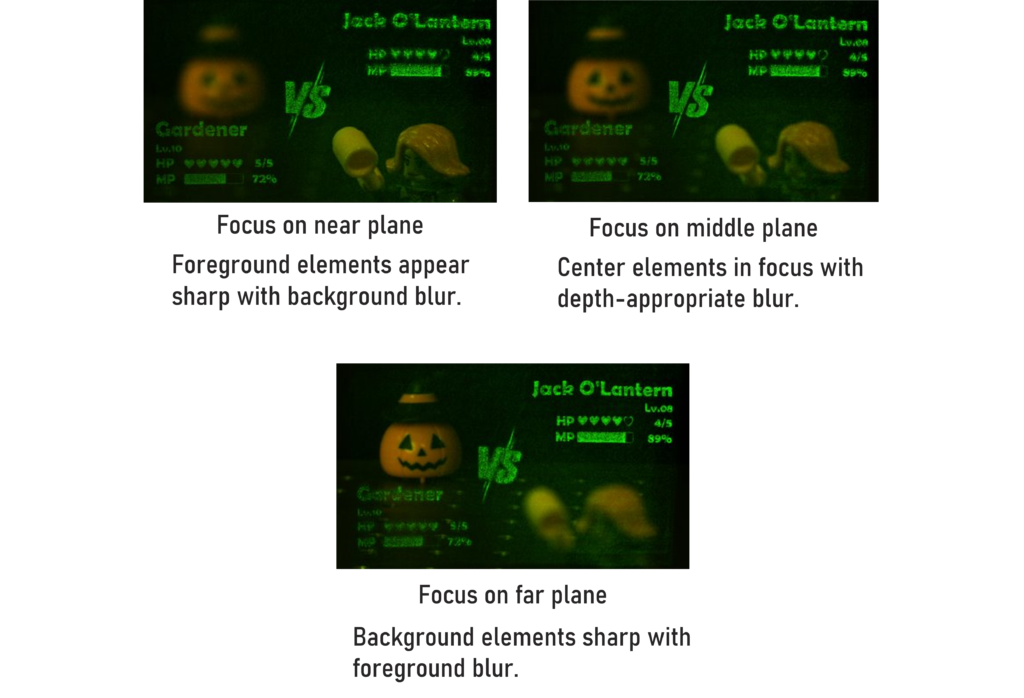

Exploring Mixed Reality Presentation with Holographic Near-eye and Head-up Displays

This project is showcased in the fifth exhibition – Technologies and Innovations.

Principal Investigator: Professor Yifan Evan PENG (Assistant Professor, Department of Electrical and Electronic Engineering)