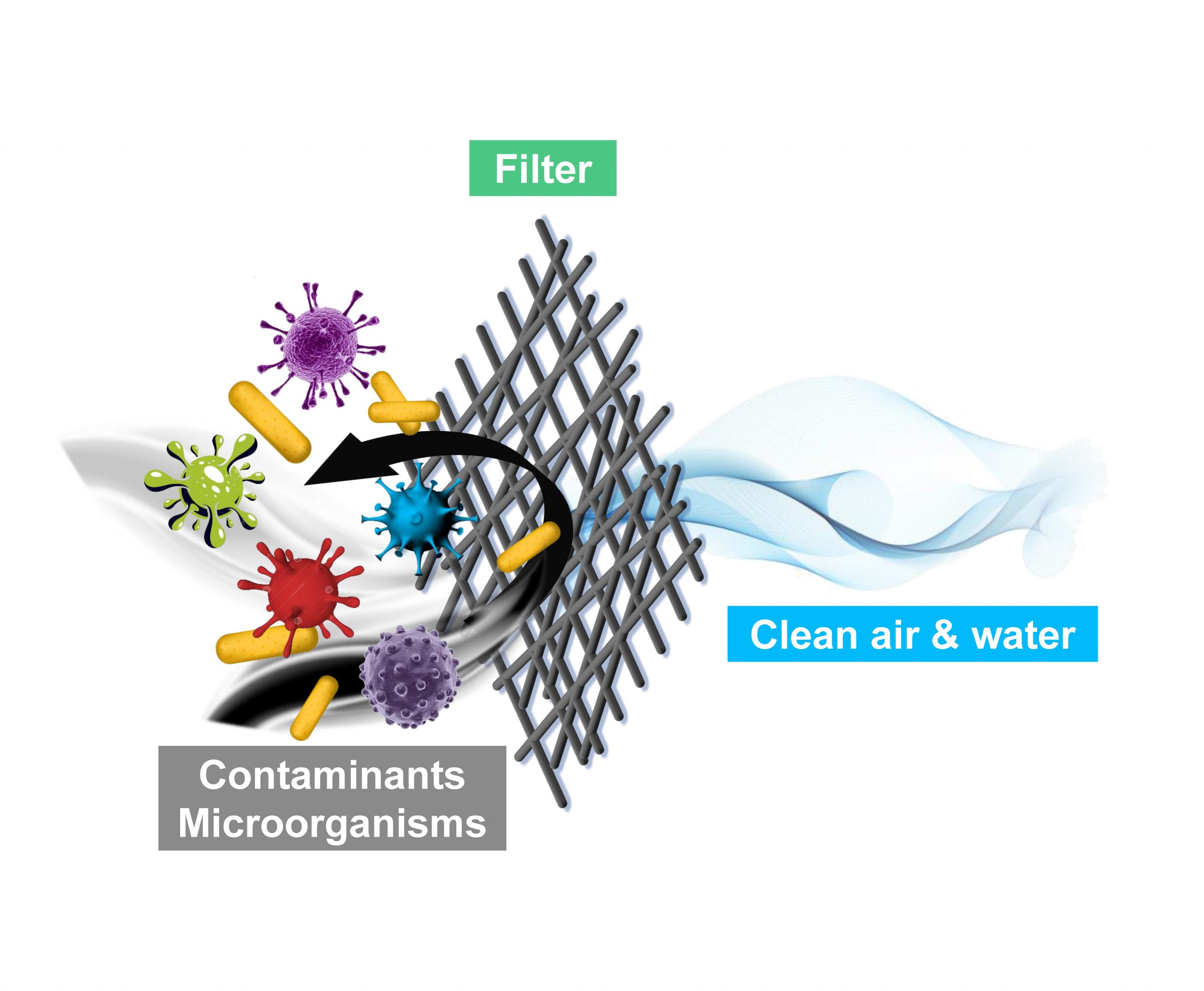

Multifunctional Filters for Protecting Public Health

Clean water and clean air are vital for public health. This project focuses on developing high-efficiency, environmentally sustainable filters that remove harmful pollutants from air and water. The team has developed novel filter architectures and functionalities to achieve high permeance, high removal efficiency, and excellent reusability.